As a RAC fanatic, I have made the new Oracle 11g Release 2 Grid Infrastructure one of my main areas of investigation. Here is what I found to be the new features of Grid Infrastructure, which, by the way, comprises Oracle Clusterware and Oracle Automatic Storage Management (ASM). All of them are truly fantastic features that are screaming to be investigated... I'll do my best to cover some of them here in the near future.

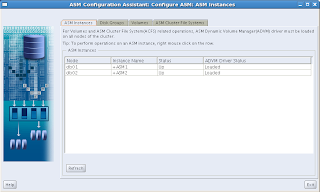

Oracle Grid Infrastructure

With Oracle grid infrastructure 11g release 2 (11.2), Automatic Storage Management (ASM) and Oracle Clusterware are installed into a single home directory, which is referred to as the Grid Infrastructure home.

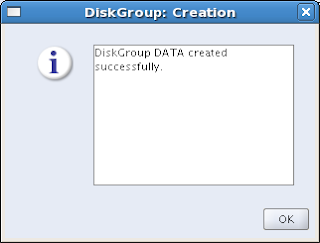

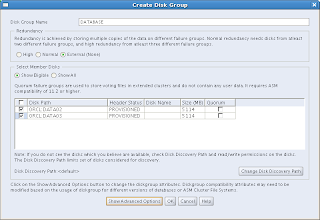

Oracle Automatic Storage Management and Oracle Clusterware Files

With this release, Oracle Cluster Registry (OCR) and voting disks can be placed on Automatic Storage Management (ASM).

This feature enables ASM to provide a unified storage solution that stores all the data for both the clusterware and the database, without the need for third-party volume managers or cluster file systems.

For new installations, OCR and voting disk files can be placed on ASM, on a cluster file system, or on NFS.

Installing Oracle Clusterware files on raw or block devices is no longer supported, unless an existing system is being upgraded.

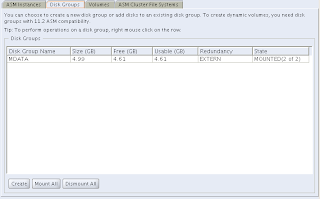

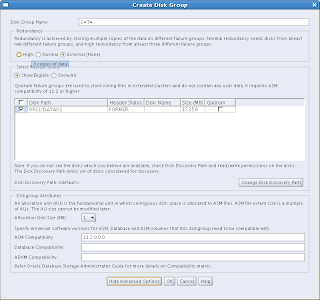

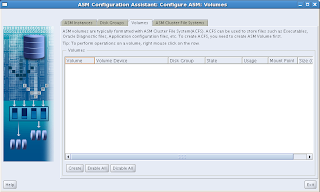

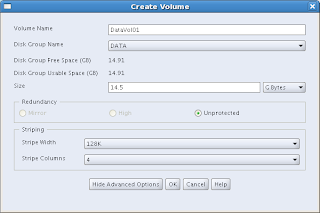

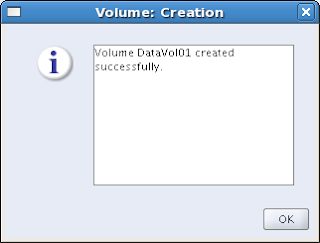

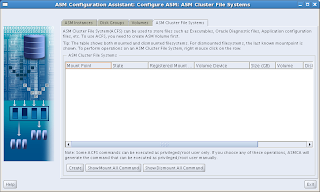

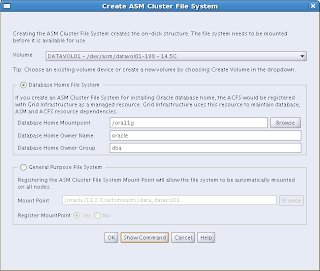

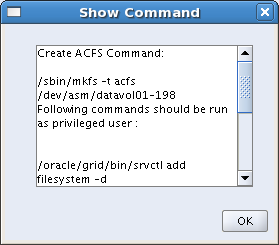

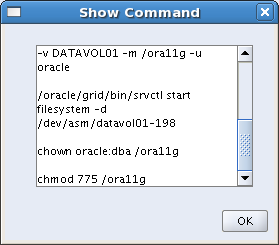

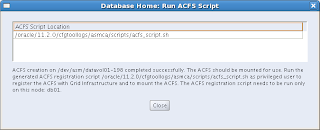

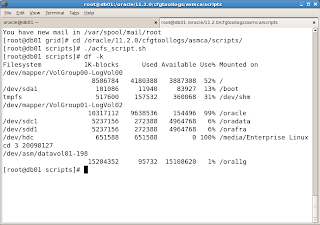

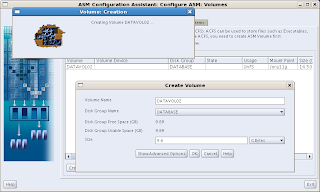

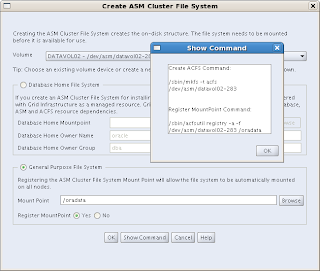

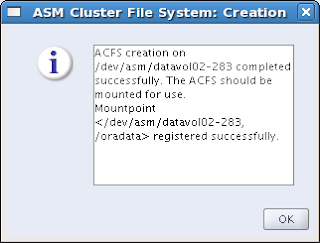

Automatic Storage Management Cluster File System (ACFS)

Oracle Automatic Storage Management Cluster File System (Oracle ACFS) is a new multi-platform, scalable file system and storage management design that extends Oracle Automatic Storage Management (ASM) technology to support all application data. Oracle ACFS provides dynamic file system resizing. It improves performance by distributing, balancing, and striping data across all available disks, and it provides storage reliability through ASM's mirroring and parity protection.
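The following toy Python model makes the striping and mirroring idea concrete. It is a sketch under stated assumptions, not ASM's actual allocation algorithm. Real ASM places mirror copies in different failure groups and uses partner disks. In this model, primary extents are striped round-robin across the disks, and each mirror copy goes on the next disk, so no extent ever shares a disk with its own mirror. The disk names are hypothetical.

```python
from typing import Dict, List, Tuple


def place_extents(num_extents: int, disks: List[str]) -> List[Tuple[str, str]]:
    """Toy placement: return (primary_disk, mirror_disk) for each extent.

    Assumption: round-robin striping with the mirror on the next disk.
    This is NOT ASM's real allocation algorithm.
    """
    if len(disks) < 2:
        raise ValueError("two-way mirroring needs at least two disks")
    placement = []
    for extent in range(num_extents):
        primary = disks[extent % len(disks)]        # stripe across all disks
        mirror = disks[(extent + 1) % len(disks)]   # never the same disk as primary
        placement.append((primary, mirror))
    return placement


def copies_per_disk(placement: List[Tuple[str, str]]) -> Dict[str, int]:
    """Count how many extent copies (primary or mirror) land on each disk."""
    counts: Dict[str, int] = {}
    for primary, mirror in placement:
        for disk in (primary, mirror):
            counts[disk] = counts.get(disk, 0) + 1
    return counts


if __name__ == "__main__":
    layout = place_extents(6, ["disk1", "disk2", "disk3"])
    print(layout)
    print(copies_per_disk(layout))  # each disk holds 4 of the 12 copies
```

Because the copies are spread evenly, I/O can be balanced across every disk, and losing any single disk still leaves one copy of each extent.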

ASM Job Role Separation Option with SYSASM

The SYSASM privilege that was introduced in Oracle ASM 11g release 1 (11.1) is now fully separated from the SYSDBA privilege. If you choose to use this optional feature and designate different operating system groups as the OSASM and OSDBA groups, then the SYSASM administrative privilege is available only to members of the OSASM group. The SYSASM privilege can also be granted using password authentication on the Oracle ASM instance.

You can grant OPERATOR privileges (a subset of the SYSASM privileges, including starting and stopping ASM) to members of the OSOPER for ASM group.

Granting storage-tier system privileges through SYSASM instead of SYSDBA gives a clearer division of responsibility between ASM administration and database administration. It also helps prevent different databases that use the same storage from accidentally overwriting each other's files.
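The following minimal Python sketch shows how OS group membership maps to ASM administrative privileges once job role separation is in place. The group names `asmadmin` and `asmoper` are assumptions based on common convention, and your installation may use different ones. The sketch models only the two privileges described above: SYSASM from the OSASM group, and the OPERATOR subset (SYSOPER) from the OSOPER for ASM group.

```python
from typing import Set

# Hypothetical OS group names; asmadmin/asmoper are common conventions,
# but your installation may use different names.
OSASM_GROUP = "asmadmin"
OSOPER_ASM_GROUP = "asmoper"


def asm_admin_privileges(user_groups: Set[str],
                         osasm_group: str = OSASM_GROUP,
                         osoper_asm_group: str = OSOPER_ASM_GROUP) -> Set[str]:
    """Return the ASM administrative privileges a user's OS groups grant.

    Models only the separated setup: SYSASM comes from the OSASM group,
    and the OPERATOR subset (SYSOPER) comes from the OSOPER for ASM group.
    """
    privileges: Set[str] = set()
    if osasm_group in user_groups:
        privileges.add("SYSASM")
    if osoper_asm_group in user_groups:
        privileges.add("SYSOPER")
    return privileges


if __name__ == "__main__":
    print(asm_admin_privileges({"oinstall", "dba"}))       # database admin only: no SYSASM
    print(asm_admin_privileges({"oinstall", "asmadmin"}))  # storage admin
    print(asm_admin_privileges({"oinstall", "asmoper"}))   # ASM operator
```

Under this model, a DBA who belongs only to the database's OSDBA group gets no ASM administrative privilege. That is the division of responsibility the feature is meant to enforce.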

Cluster Time Synchronization Service

Cluster node times should be synchronized. With this release, Oracle Clusterware provides Cluster Time Synchronization Service (CTSS), which ensures that there is a synchronization service in the cluster. If Network Time Protocol (NTP) is not found during cluster configuration, then CTSS is configured to ensure time synchronization.
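The following minimal Python sketch illustrates the decision described above. The mode names "observer" and "active" are the ones CTSS reports: it only monitors time when NTP is present, and it synchronizes clocks itself otherwise. The node names and timestamps are hypothetical. The offset calculation is a simplification for illustration, not CTSS's actual algorithm.

```python
from typing import Dict


def ctss_mode(ntp_configured: bool) -> str:
    """With NTP present, CTSS only observes; without it, CTSS synchronizes."""
    return "observer" if ntp_configured else "active"


def clock_offsets(node_times: Dict[str, float], reference_node: str) -> Dict[str, float]:
    """Offset of each node's clock from a reference node, in seconds.

    Simplification: assumes all readings were taken at the same instant.
    """
    reference = node_times[reference_node]
    return {node: t - reference for node, t in node_times.items()}


if __name__ == "__main__":
    print(ctss_mode(ntp_configured=False))
    print(clock_offsets({"rac1": 100.0, "rac2": 100.5, "rac3": 99.75}, "rac1"))
```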

Enterprise Manager Database Control Provisioning

Enterprise Manager Database Control 11g can automatically provision Oracle grid infrastructure and Oracle RAC installations on new nodes, and then extend the existing Oracle grid infrastructure and Oracle RAC database to these provisioned nodes. Before you can use this provisioning procedure, you must have a successful Oracle RAC installation.

See Also:

Oracle Real Application Clusters Administration and Deployment Guide for information about this feature.

Fixup Scripts and Grid Infrastructure Checks

With Oracle Clusterware 11g release 2 (11.2), the installer (OUI) detects when the minimum requirements for installation are not met, and creates shell scripts, called fixup scripts, to resolve many incomplete system configuration requirements. If OUI detects an incomplete task that is marked "fixable", you can generate the fixup script by clicking the Fix & Check Again button.

The fixup script is generated during installation. You are prompted to run the script as root in a separate terminal session. When you run the script, it raises kernel values to required minimums, if necessary, and completes other operating system configuration tasks.
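The following Python sketch shows the "raise kernel values to required minimums, if necessary" logic. It is not the content of OUI's actual fixup script. The parameter names are real Linux sysctl keys, but the minimum values here are illustrative assumptions; take the real ones for your platform from the installation guide. The sketch emits a `sysctl -w` command only for values that are missing or below the minimum, so it never lowers a setting.

```python
from typing import Dict, List, Optional

# Illustrative minimums only; take the real values for your platform
# from the Oracle installation guide.
REQUIRED_MINIMUMS: Dict[str, int] = {
    "fs.aio-max-nr": 1048576,
    "fs.file-max": 6815744,
    "kernel.shmmni": 4096,
}


def fixup_commands(current: Dict[str, int],
                   required: Optional[Dict[str, int]] = None) -> List[str]:
    """Emit sysctl commands that raise parameters below their minimum.

    Values already at or above the minimum are left alone, so this only
    ever raises settings, never lowers them.
    """
    required = REQUIRED_MINIMUMS if required is None else required
    commands = []
    for key, minimum in sorted(required.items()):
        value = current.get(key)
        if value is None or value < minimum:
            commands.append(f"sysctl -w {key}={minimum}")
    return commands


if __name__ == "__main__":
    observed = {"fs.file-max": 65536, "kernel.shmmni": 8192}  # hypothetical readings
    print("\n".join(fixup_commands(observed)))
```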

You also can have Cluster Verification Utility (CVU) generate fixup scripts before installation.

Grid Plug and Play

In the past, adding or removing servers in a cluster required extensive manual preparation. With this release, you can continue to configure server nodes manually, or use Grid Plug and Play to configure them dynamically as nodes are added to or removed from the cluster.

Grid Plug and Play reduces the costs of installing, configuring, and managing server nodes by starting a grid naming service within the cluster, which allows each node to perform the following tasks dynamically:

- Negotiating appropriate network identities for itself
- Acquiring additional information it needs to operate from a configuration profile
- Configuring or reconfiguring itself using profile data, making hostnames and addresses resolvable on the network

Because servers perform these tasks dynamically, the number of steps required to add or remove nodes is minimized.
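The following Python toy illustrates the idea of nodes obtaining a network identity dynamically. It is a hypothetical stand-in: the real Grid Naming Service is considerably more involved, and this class does not reflect its protocol or API. It shows only that a node gets a resolvable name and address when it joins and gives them back when it leaves. The subnet (a documentation range) and the domain are assumptions.

```python
import ipaddress
from typing import Dict, List, Optional


class ToyNamingService:
    """Hypothetical stand-in for a grid naming service.

    Nodes get an address from a pool when they join and give it back when
    they leave; hostnames resolve only while the node is a member.
    """

    def __init__(self, subnet: str, domain: str) -> None:
        self._free: List[str] = [str(h) for h in ipaddress.ip_network(subnet).hosts()]
        self._domain = domain
        self._records: Dict[str, str] = {}

    def join(self, node: str) -> str:
        fqdn = f"{node}.{self._domain}"
        if fqdn not in self._records:          # joining twice keeps the existing address
            if not self._free:
                raise RuntimeError("address pool exhausted")
            self._records[fqdn] = self._free.pop(0)
        return fqdn

    def leave(self, node: str) -> None:
        address = self._records.pop(f"{node}.{self._domain}")
        self._free.append(address)             # address can be reused later

    def resolve(self, fqdn: str) -> Optional[str]:
        return self._records.get(fqdn)


if __name__ == "__main__":
    gns = ToyNamingService("192.0.2.0/29", "grid.example.com")
    rac1 = gns.join("rac1")
    rac2 = gns.join("rac2")
    print(rac1, gns.resolve(rac1))
    gns.leave("rac1")
    print(rac1, gns.resolve(rac1))
```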

Intelligent Platform Management Interface (IPMI) Integration

Intelligent Platform Management Interface (IPMI) is an industry-standard management protocol that is included with many servers today. IPMI operates independently of the operating system and can operate even if the system is not powered on. Servers with IPMI contain a baseboard management controller (BMC), which is used to communicate with the server.

If IPMI is configured, Oracle Clusterware uses IPMI when node fencing is required and the server is not responding.
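The standard `ipmitool` utility helps show what "fencing through the BMC" amounts to. This is not a description of how Oracle Clusterware issues the request internally. The Python sketch below builds, but does not run, an `ipmitool` command that powers off an unresponsive node. The BMC address and user name are hypothetical, and choosing `chassis power off` rather than a reset is an assumption. The `-E` flag makes `ipmitool` read the password from the `IPMI_PASSWORD` environment variable, which keeps the password off the command line.

```python
from typing import List, Optional


def ipmi_fence_command(node_responding: bool,
                       bmc_address: Optional[str],
                       bmc_user: str = "admin") -> Optional[List[str]]:
    """Return an ipmitool command that powers off an unresponsive node.

    Returns None when the node is still responding or IPMI is not configured.
    The password is read by ipmitool from the IPMI_PASSWORD environment
    variable (-E), so it never appears on the command line.
    """
    if node_responding or bmc_address is None:
        return None
    return ["ipmitool", "-I", "lanplus", "-H", bmc_address,
            "-U", bmc_user, "-E", "chassis", "power", "off"]


if __name__ == "__main__":
    print(ipmi_fence_command(node_responding=False, bmc_address="192.0.2.50"))
```

The returned list could be passed to `subprocess.run`. It is kept as data here so that the fencing decision can be inspected without touching real hardware.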

Oracle Clusterware Out-of-place Upgrade

With this release, you can install a new version of Oracle Clusterware into a separate home from an existing Oracle Clusterware installation. This feature reduces the downtime required to upgrade a node in the cluster. When performing an out-of-place upgrade, the old and new versions of the software are present on the nodes at the same time, each in a different home location, but only one version of the software is active.
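The following toy Python model captures the out-of-place rule described above: a new version goes into its own home, both homes coexist, and exactly one is active at a time. The version labels and home paths are hypothetical. The model does not perform an upgrade; it only tracks state.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class NodeSoftware:
    """Toy model of one node: several installed homes, exactly one active."""
    homes: Dict[str, str] = field(default_factory=dict)  # version -> home path
    active_version: str = ""

    def install(self, version: str, home: str) -> None:
        # Out-of-place: a new version must go into its own, separate home.
        if home in self.homes.values():
            raise ValueError(f"{home} already holds another version")
        self.homes[version] = home

    def activate(self, version: str) -> None:
        if version not in self.homes:
            raise KeyError(f"version {version} is not installed")
        self.active_version = version  # in this model, only this switch changes what runs

    @property
    def active_home(self) -> str:
        return self.homes[self.active_version]


if __name__ == "__main__":
    node = NodeSoftware()
    node.install("11.1", "/u01/app/11.1.0/crs")   # hypothetical paths
    node.activate("11.1")
    node.install("11.2", "/u01/app/11.2.0/grid")  # old home keeps running meanwhile
    node.activate("11.2")
    print(node.homes, node.active_home)
```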

Oracle Clusterware Administration with Oracle Enterprise Manager

With this release, you can use the Enterprise Manager Cluster Home page for full administrative and monitoring support of both standalone database and Oracle RAC environments, using High Availability Application and Oracle Cluster Resource Management.

When Oracle Enterprise Manager is installed with Oracle Clusterware, it can provide a set of users that have the Oracle Clusterware Administrator role in Enterprise Manager, and provide full administrative and monitoring support for High Availability application and Oracle Clusterware resource management. After you have completed installation and have Enterprise Manager deployed, you can provision additional nodes added to the cluster using Enterprise Manager.

SCAN for Simplified Client Access

With this release, the single client access name (SCAN) is the hostname to provide for all clients connecting to the cluster. The SCAN is a domain name registered to at least one and up to three IP addresses, either in the Domain Name System (DNS) or the Grid Naming Service (GNS). The SCAN eliminates the need to change clients when nodes are added to or removed from the cluster. Clients using the SCAN can also access the cluster using EZCONNECT.
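The following Python sketch shows how a client might use a SCAN. It builds an EZCONNECT string of the form `host:port/service_name` and checks that the SCAN resolves to one to three addresses. The SCAN name `cluster-scan.example.com`, the service `orcl`, and the default listener port 1521 are assumptions for illustration. `resolve_scan` performs a real DNS lookup through the standard library, so it only works against a name that actually exists.

```python
import ipaddress
import socket
from typing import List


def ezconnect(scan_name: str, service_name: str, port: int = 1521) -> str:
    """EZCONNECT string of the form host:port/service_name."""
    return f"{scan_name}:{port}/{service_name}"


def check_scan_addresses(addresses: List[str]) -> List[str]:
    """Validate that a SCAN maps to between one and three IP addresses."""
    if not 1 <= len(addresses) <= 3:
        raise ValueError(f"SCAN should resolve to 1-3 addresses, got {len(addresses)}")
    for address in addresses:
        ipaddress.ip_address(address)  # raises ValueError if malformed
    return sorted(addresses)


def resolve_scan(scan_name: str, port: int = 1521) -> List[str]:
    """Look up the SCAN in DNS and return its distinct addresses."""
    infos = socket.getaddrinfo(scan_name, port, proto=socket.IPPROTO_TCP)
    return check_scan_addresses(list({info[4][0] for info in infos}))


if __name__ == "__main__":
    print(ezconnect("cluster-scan.example.com", "orcl"))  # cluster-scan.example.com:1521/orcl
```

Because clients only ever see the SCAN name, the connect string stays the same when nodes are added or removed. Only the DNS or GNS records behind it change.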

SRVCTL Command Enhancements for Patching

With this release, you can use srvctl to shut down all Oracle software running within an Oracle home, in preparation for patching. Oracle grid infrastructure patching is automated across all nodes, and patches can be applied in a multi-node, multi-patch fashion.

Typical Installation Option

To streamline cluster installations, especially for customers who are new to clustering, Oracle introduces the Typical Installation path. Typical installation sets as many options as possible to the values recommended as best practices.

Voting Disk Backup Procedure Change

In prior releases, backing up the voting disks using a dd command was a required postinstallation task. With Oracle Clusterware release 11.2 and later, backing up and restoring a voting disk using the dd command is not supported.

Backing up voting disks manually is no longer required, as voting disks are backed up automatically in the OCR as part of any configuration change and voting disk data is automatically restored to any added voting disks.